# Getting "a kube"

Before we get started we need a Kubernetes cluster to use! Several cloud providers offer Kubernetes as a service, but we're going to use kind to create a local cluster to play with first. If you're interested in deploying a multi-server solution, we'll look into kubeadm, the official Kubernetes cluster bootstrapping tool, later.

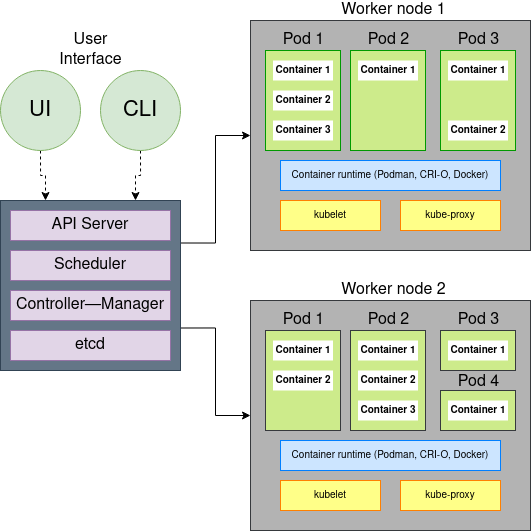

# The k8s cluster

A k8s cluster is a group of machines; each machine is called a node. Nodes can have different roles in k8s:

- "Controller" or "master" nodes that run the Kubernetes API and the control plane

  - You can run multiple controllers (in odd numbers) to have a highly available cluster

- One or more "worker" nodes that run the actual workloads (= pods with containers).

(Nived Velayudhan, CC BY-SA 4.0)

# The Laptop Essentials: kubectl

Before we can start we need to install kubectl, the command-line tool for interacting with Kubernetes. You can find the installation instructions on the official website.

kubectl is to Kubernetes what systemctl is to systemd. Some people also see it as a kind of SSH alternative, as many developers use it on their local machine to interact with a remote cluster.

In our class we will use kubectl to interact with our local cluster on our Linux VM (as the Linux bash shell is just way better![1]).

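```shell
# Install kubectl
# The legacy apt.kubernetes.io / packages.cloud.google.com repository has been
# retired; packages are now served from pkgs.k8s.io, one repository per minor
# version. v1.25 matches the kind node image used on this page; adjust as needed.
sudo apt-get update
sudo apt-get install -y ca-certificates curl gnupg
sudo mkdir -p -m 755 /etc/apt/keyrings
curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.25/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg
echo "deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.25/deb/ /" | sudo tee /etc/apt/sources.list.d/kubernetes.list
sudo apt-get update
sudo apt-get install -y kubectl
```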

When you run kubectl version you should see the version of your client.

Tips

A kubectl cheat sheet is available on the official website.

# The kubeconfig

The kubeconfig is a YAML file that contains all the information needed to connect to one or more Kubernetes clusters. It is often shared between developers to grant cluster access, but it should be treated as confidentially as a password.

You can find it at ~/.kube/config on macOS/Linux and C:\Users\%USERNAME%\.kube\config on Windows.
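To make the structure concrete, here is a minimal sketch of a kubeconfig: a cluster, a user, and a context that ties the two together. Every name, the server address and the token are placeholders made up for illustration, and the file is written to a temporary path so your real kubeconfig is never touched.

```shell
# Hypothetical scratch location; the real file lives at ~/.kube/config.
demo_kubeconfig="$(mktemp)"

# Skeleton kubeconfig: one cluster, one user, one context joining them.
# All names, the address and the token below are placeholders.
cat > "$demo_kubeconfig" <<'EOF'
apiVersion: v1
kind: Config
clusters:
- name: demo-cluster
  cluster:
    server: https://127.0.0.1:6443
users:
- name: demo-user
  user:
    token: REPLACE_ME
contexts:
- name: demo-context
  context:
    cluster: demo-cluster
    user: demo-user
current-context: demo-context
EOF

# A kubeconfig grants access, so keep it readable by its owner only.
chmod 600 "$demo_kubeconfig"

# kubectl reads the file named by KUBECONFIG instead of ~/.kube/config.
if command -v kubectl >/dev/null 2>&1; then
  KUBECONFIG="$demo_kubeconfig" kubectl config get-contexts
fi
```

Pointing the KUBECONFIG environment variable at a different file is a simple way to switch between clusters without editing the default one.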

# kind - the developer's friend

kind is a tool for running local Kubernetes clusters using Docker containers as nodes. It is a great tool for developers as it is fast and lightweight compared to other solutions. It is an alternative to minikube, which traditionally runs the cluster in a VM and so tends to be slower and more resource-intensive.

You can install it with the following command:

```shell
curl -Lo ./kind https://kind.sigs.k8s.io/dl/v0.16.0/kind-linux-amd64
chmod +x ./kind
sudo mv ./kind /usr/local/bin/kind
```

If you need a cluster, it is as easy as running kind create cluster, and you will have a single-node cluster running in a few seconds. You can then interact with it using kubectl as you would with any other cluster; the credentials are configured automatically.

Check it out with kubectl get nodes:

```console
$ kubectl get nodes
NAME                 STATUS   ROLES                  AGE   VERSION
kind-control-plane   Ready    control-plane,master   10s   v1.22.2
```

When you are done you can delete the cluster with kind delete cluster.

When we use kind in class it is a good idea to enable ingress support so that we can use HTTP/HTTPS routing. First, create a kind configuration file and then load it while creating the kind cluster:

```console
$ cat kindconfig
kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
nodes:
- role: control-plane
  kubeadmConfigPatches:
  - |
    kind: InitConfiguration
    nodeRegistration:
      kubeletExtraArgs:
        node-labels: "ingress-ready=true"
  extraPortMappings:
  - containerPort: 80
    hostPort: 80
    protocol: TCP
  - containerPort: 443
    hostPort: 443
    protocol: TCP

$ kind create cluster --config=kindconfig
Creating cluster "kind" ...
 ✓ Ensuring node image (kindest/node:v1.25.2) 🖼
 ✓ Preparing nodes 📦 📦 📦
 ✓ Writing configuration 📜
 ✓ Starting control-plane 🕹️
 ✓ Installing CNI 🔌
 ✓ Installing StorageClass 💾
 ✓ Joining worker nodes 🚜
Set kubectl context to "kind-kind"
You can now use your cluster with:

kubectl cluster-info --context kind-kind

Thanks for using kind! 😊
```

Want to create a multi-node cluster? Then you can use the following kind configuration file, which sets up 1 controller node and 2 worker nodes:

```yaml
kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
nodes:
- role: control-plane
  kubeadmConfigPatches:
  - |
    kind: InitConfiguration
    nodeRegistration:
      kubeletExtraArgs:
        node-labels: "ingress-ready=true"
  extraPortMappings:
  - containerPort: 80
    hostPort: 80
    protocol: TCP
  - containerPort: 443
    hostPort: 443
    protocol: TCP
- role: worker
- role: worker
```
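As a rough sketch of how such a file is used, the snippet below writes a stripped-down multi-node configuration (ingress settings left out for brevity) and creates a separately named cluster from it. The file name kind-multinode.yaml and the cluster name multinode are made up for this example; kind prefixes the kubectl context with kind-, so the context becomes kind-multinode.

```shell
# Hypothetical file name; any path can be passed to --config.
config_file="kind-multinode.yaml"

# Smallest multi-node layout: 1 control-plane node and 2 workers.
cat > "$config_file" <<'EOF'
kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
nodes:
- role: control-plane
- role: worker
- role: worker
EOF

# Only talk to Docker when the tools are actually installed.
if command -v kind >/dev/null 2>&1 && command -v kubectl >/dev/null 2>&1; then
  # --name keeps this cluster apart from the default one called "kind".
  kind create cluster --name multinode --config "$config_file"
  # Expect three nodes: one control-plane and two workers.
  kubectl get nodes --context kind-multinode
fi
```

When you are done, kind delete cluster --name multinode removes this cluster again without touching the default one.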

# Ingress

kind doesn't come with an ingress controller out of the box. We will use ingress-nginx, one of the most widely used ingress controllers for Kubernetes.

```shell
# Now let's install our ingress controller component
kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/static/provider/kind/deploy.yaml
kubectl get pod --all-namespaces # watch to see them all starting
```
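Instead of watching the pod list by hand, you can let kubectl block until the controller is ready. The sketch below assumes the manifest above was applied as-is, so that the controller runs in the ingress-nginx namespace with the label app.kubernetes.io/component=controller. The helper name wait_for_ingress is made up for this example.

```shell
# Block until the ingress-nginx controller pod reports Ready,
# or give up after 90 seconds.
wait_for_ingress() {
  kubectl wait --namespace ingress-nginx \
    --for=condition=ready pod \
    --selector=app.kubernetes.io/component=controller \
    --timeout=90s
}

if command -v kubectl >/dev/null 2>&1; then
  wait_for_ingress || echo "ingress controller is not ready (yet)" >&2
else
  echo "kubectl not found; skipping the readiness check" >&2
fi
```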

# Storage

kind has a built-in local storage provisioner; we will use it to make Persistent Volumes available in our cluster.
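A minimal sketch of requesting storage from that provisioner: the claim below names no StorageClass, so it relies on the cluster's default one (on kind this is expected to be a class called standard, which the first command lets you verify). The claim name demo-claim and the 1Gi size are arbitrary example values.

```shell
# Write the claim to a scratch file so it can be inspected before applying.
pvc_manifest="$(mktemp)"

cat > "$pvc_manifest" <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: demo-claim
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
EOF

if command -v kubectl >/dev/null 2>&1; then
  kubectl get storageclass            # shows which class is the default
  kubectl apply -f "$pvc_manifest"    # create the claim
  kubectl get pvc demo-claim          # inspect its status
fi
```

Depending on the StorageClass's volume binding mode, the claim may stay Pending until a pod actually mounts it.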

# kubeadm - the production ready official way

Note

kubeadm is covered here for completeness but is out of scope for what we will do in class. You can use it in your assignment for bonus points.

kubeadm is the community-supported way to install Kubernetes and a great tool for setting up a production-ready cluster. It is a bit more complex to use than kind, but it still gets you to a real cluster quickly.

To set it up you need:

- One "controller" node that runs the Kubernetes API and the control plane

  - You can run multiple controllers (in odd numbers) to have a highly available cluster

- One or more "worker" nodes that run the actual workloads

# Prepare the servers

Before we get started we need to prepare our Ubuntu servers to run Kubernetes and install containerd on them.

```shell
# load needed network kernel modules
cat <<EOF | sudo tee /etc/modules-load.d/containerd.conf
overlay
br_netfilter
EOF

sudo modprobe overlay
sudo modprobe br_netfilter

# Setup required sysctl params, these persist across reboots.
cat <<EOF | sudo tee /etc/sysctl.d/99-kubernetes-cri.conf
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-ip6tables = 1
EOF

# Apply sysctl params without reboot
sudo sysctl --system

# Install containerd (the container runtime)
sudo apt-get update && sudo apt-get install -y containerd
sudo mkdir -p /etc/containerd
containerd config default | sudo tee /etc/containerd/config.toml
sudo systemctl restart containerd
```
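It can be worth checking that the preparation actually took effect before moving on. The sketch below reads the three settings straight from /proc/sys, which is what sysctl followed by a key would report, and looks for the two modules in /proc/modules. The helper name show_sysctl is made up for this example.

```shell
# Print "key = value" for a sysctl key, or "not set" when the key is absent.
# The net.bridge.* keys only exist once br_netfilter is loaded.
show_sysctl() {
  path="/proc/sys/$(printf '%s' "$1" | tr . /)"
  if [ -r "$path" ]; then
    printf '%s = %s\n' "$1" "$(cat "$path")"
  else
    printf '%s = not set\n' "$1"
  fi
}

# All three should report 1 on a prepared server.
for key in net.bridge.bridge-nf-call-iptables \
           net.bridge.bridge-nf-call-ip6tables \
           net.ipv4.ip_forward; do
  show_sysctl "$key"
done

# List the two kernel modules if they are loaded.
grep -E '^(overlay|br_netfilter) ' /proc/modules 2>/dev/null \
  || echo "overlay/br_netfilter not listed as loaded modules (they may be built into the kernel)"
```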

# The controller

The controller is the server that runs the Kubernetes API and the control plane. It is the server that you will connect to when you use kubectl.

Install the Kubernetes packages with the following commands:

```shell
# Packages are served from pkgs.k8s.io (the legacy apt.kubernetes.io repository
# has been retired). v1.25 is used here as an example; pick the minor version you need.
sudo apt-get update && sudo apt-get install -y apt-transport-https ca-certificates curl gnupg
sudo mkdir -p -m 755 /etc/apt/keyrings
curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.25/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg
echo "deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.25/deb/ /" | sudo tee /etc/apt/sources.list.d/kubernetes.list
sudo apt-get update
sudo apt-get install -y kubelet kubeadm kubectl
sudo apt-mark hold kubelet kubeadm kubectl # prevent auto-updates
```

You can then initialize the cluster with the following command:

```shell
sudo kubeadm init --pod-network-cidr=172.16.0.0/12
```

This command sets up the control plane and prints a kubeadm join command to run on the worker nodes.
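kubeadm init also writes an administrator kubeconfig to /etc/kubernetes/admin.conf, readable by root only. To use kubectl as your regular user on the controller, copy it into place, roughly as follows. This sketch mirrors the steps kubeadm prints at the end of init; the guard around it is only there so the snippet does nothing on a machine that is not a controller.

```shell
# Create the per-user kubectl directory.
mkdir -p "$HOME/.kube"

# admin.conf is owned by root, so copy it with sudo and then hand
# ownership of the copy to the current user.
if [ -f /etc/kubernetes/admin.conf ]; then
  sudo cp -i /etc/kubernetes/admin.conf "$HOME/.kube/config"
  sudo chown "$(id -u):$(id -g)" "$HOME/.kube/config"
  kubectl get nodes
else
  echo "no /etc/kubernetes/admin.conf found; run this on the controller after kubeadm init" >&2
fi
```

Until a networking add-on is installed, the controller is expected to show up as NotReady in the node list.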

# The workers

To set up a worker node you need a join token from the controller. If you no longer have the join command that kubeadm init printed, you can generate a new one on the controller:

```shell
sudo kubeadm token create --print-join-command
```

This will output a command that you can run on the worker nodes to join the cluster.

But first you need to install the same packages on each worker as on the controller:

```shell
# Packages are served from pkgs.k8s.io (the legacy apt.kubernetes.io repository
# has been retired). Use the same minor version as on the controller.
sudo apt-get update && sudo apt-get install -y apt-transport-https ca-certificates curl gnupg
sudo mkdir -p -m 755 /etc/apt/keyrings
curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.25/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg
echo "deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.25/deb/ /" | sudo tee /etc/apt/sources.list.d/kubernetes.list
sudo apt-get update
sudo apt-get install -y kubelet kubeadm kubectl
sudo apt-mark hold kubelet kubeadm kubectl # prevent auto-updates
```

When you have the command from the controller you can run it on the worker nodes to join the cluster.

You can check the status of the cluster with kubectl get nodes.

# Batteries not included

kubeadm installs only a very minimal set of components, so that it stays unopinionated about tools that are not part of the Kubernetes project itself. You will need to install additional components to get a full-featured cluster.

# Networking

This is an essential component of Kubernetes. Without a networking solution you will not be able to start pods, let alone have them communicate with each other.

There are many networking solutions available; one popular choice is Calico. You can install it with the following command:

```shell
kubectl apply -f https://docs.projectcalico.org/manifests/calico.yaml
```

Calico is responsible for building an overlay network between the nodes in your cluster. It also assigns IP addresses to the pods on this network; Service IPs are handed out by Kubernetes itself. This network is only accessible from within the cluster.

# Optional: LoadBalancer Services

If you want to expose your services' ports to the outside world using a "floating IP" you will need a LoadBalancer implementation. Your cloud provider will often have its own, but if you are running on bare metal you will need to install one yourself, such as MetalLB.

When installing MetalLB you need to configure a range of IP addresses that it may use. In layer 2 mode it then answers ARP requests for these addresses and forwards the traffic to the correct service.
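As an illustration of what that configuration can look like, here is a sketch using MetalLB's IPAddressPool and L2Advertisement resources. It assumes a recent MetalLB release that is configured through these custom resources and installed in the metallb-system namespace. The pool name, the advertisement name and the address range are invented for this example; the range must be replaced with free addresses on your own network.

```shell
# Write the configuration to a scratch file first so it can be reviewed.
metallb_config="$(mktemp)"

cat > "$metallb_config" <<'EOF'
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: demo-pool
  namespace: metallb-system
spec:
  addresses:
  - 192.168.1.240-192.168.1.250   # example range, adapt to your network
---
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: demo-l2
  namespace: metallb-system
spec:
  ipAddressPools:
  - demo-pool
EOF

# Apply only when kubectl is available; MetalLB itself must already be installed.
if command -v kubectl >/dev/null 2>&1; then
  kubectl apply -f "$metallb_config"
fi
```

Services of type LoadBalancer should then receive an external IP from the pool.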

# Optional: Storage

Storage is yet another service that is typically provided by your cloud provider. Open source, self-hosted solutions like OpenEBS provide a way to run your own storage. OpenEBS offers several storage engines with different performance and redundancy trade-offs.

Unless you are setting up a production environment, I recommend trying Local PV Hostpath, which creates a directory on the host and uses that as storage. This is not a production-ready solution, as each volume lives on a single server only, but it is easy to set up and will work for most use cases.

# Ingress

Just like before with kind, we need our own ingress controller. A popular choice is the NGINX Ingress Controller.

However, on bare metal you also have to decide how traffic reaches the controller, and there are several load balancing options to consider. Everything you need is described in the Bare-metal considerations guide.

# The local multi VM cluster? Vagrant of course!

Want to get going with a multi-server Kubernetes cluster on your laptop? Remember Vagrant from the first class of the year? Yup, there is a Vagrant setup for Kubernetes too! You can find it at github.com/cloudnativehero/kubeadm-vagrant.

# Want to become a real PRO?

(Do not do this... like really don't do this unless you do this for a living)

So you want to brag to your friends you know Kubernetes really, really well? Go set up a cluster using Kelsey Hightower's Kubernetes The Hard Way.

[1] Eyskens, Mariën. (2022). Bash vs. PowerShell. B300 fights.